Having your pages and posts rank high on search engines means you’re implementing good SEO practices. That’s generally good, but it isn’t always what you want: there are pages and posts you may not want search engines to index and show to searchers in their results.

This article explores four methods to noindex posts or pages in WordPress and prevent them from appearing on search results.

What Is “noindex”?

noindex is a rule, usually added as an HTML meta tag, that tells search engine robots (“crawlers”) not to index a particular web page, which keeps it out of search results. For context, search engines have three primary functions: crawling, indexing, and ranking.

- Crawling is scouring the internet, going through the code and content of every URL that search engine crawlers can find. A “crawler” or “web spider” is a program search engines use to crawl pages.

- Indexing is storing and organizing the content scoured during the crawling process.

- Ranking is providing the most relevant content for the searcher’s query; what you see on search results is what the search engine ranked from most to least relevant for your query.

When a web page is indexed (added to the index, an extensive database of crawled pages), it competes with other pages to rank in search results for specific keywords. What the noindex tag does is prevent that page from being added to the index and thus prevent it from appearing in search results.

Why Would You Want to noindex a Page?

Although it seems counterintuitive at first, there are cases where you’ll want to prevent search engines from indexing specific pages from your website.

One of the most common reasons to exclude pages from indexing is that they’re unrelated to the keywords your website targets.

For example, if your website ranks high on search results for a specific subject like WordPress development, you may want to exclude pages that don’t contribute to search engine rankings for the keywords related to the topic.

One common case would be “Thank you” pages visitors land on after joining your newsletter, subscribing to a service, or buying merchandise. Even if it’s a good “Thank you” page, indexing it may drop your site’s keyword density for the searches you want to rank on.

Other cases where you might want to apply the noindex tag include the following:

- “Members Only” pages that users can only access after submitting their contact information.

- Printer-friendly versions of pages, which search engines may detect as duplicate content and penalize your site for it.

- Author archives on blogs with only one author, since the archive repeats the main blog listing and may be flagged as duplicate content.

- Admin and login pages.

- Pages that are still under construction.

- Internal search results.

- Category and tag pages that are taking traffic away from valuable content.

- Staging environments.

- Password-protected pages.

4 Methods to noindex a Page in WordPress

There are multiple ways to noindex a page in WordPress. We’ve included the 4 methods that we consider the easiest, quickest, and most user-friendly.

Method #1: noindex a Page or Post With Yoast SEO’s “Advanced” Settings

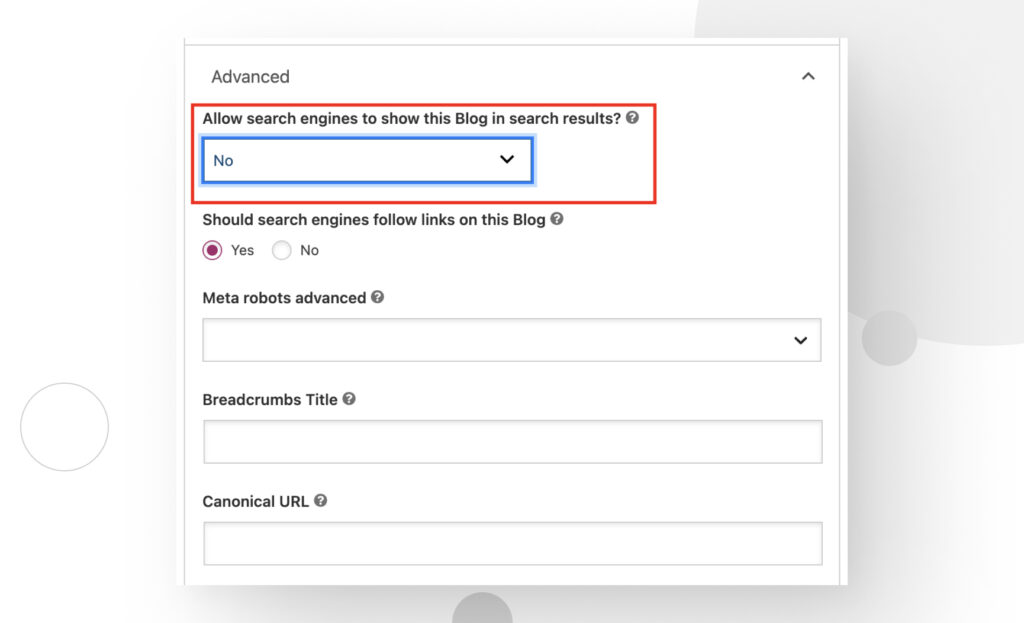

You can use the Yoast SEO plugin to apply the noindex tag. Because Yoast SEO is so widely used, this is probably the easiest and most user-friendly method. Go to the post or page you want to keep out of search results, scroll down to the Yoast SEO box, click the Advanced tab, set “Allow search engines to show this Post in search results?” to “No,” and update the post.

You probably also noticed the “Should search engines follow links on this Blog?” option. Most of the time, you’ll want to choose “Yes,” but in some cases, like posts and pages that contain links in the comments or from affiliate brands, you may prefer choosing “No.” This is because sponsored content and user-submitted links can hurt your site’s SEO.

Method #2: noindex a Page or Post With the robots.txt File

robots.txt is a text file in your WordPress website’s root folder. From the robots.txt file, you can issue commands that tell search engine crawlers which of your site’s resources they can access.

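To access the robots.txt file, you can connect to your website via FTP using FileZilla or a similar app, use cPanel’s File Manager, or use Yoast SEO by going to SEO > Tools and clicking File Editor on the next screen. Regardless of the method, you’ll add a Disallow rule for the page under a User-agent line (User-agent: * applies the rule to all crawlers):

User-agent: *
Disallow: /URL-for-your-page/

If your robots.txt file already has a User-agent: * line, add only the Disallow line below it. Keep in mind that Disallow stops crawlers from fetching the page rather than telling them not to index it, so while this method can work, it’s not ideal for reasons we’ll explain in a section below.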
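If you want to confirm that a Disallow rule matches the page you have in mind before relying on it, you can test it locally. The sketch below assumes Python 3 and uses the standard library’s urllib.robotparser module; the rule, the is_blocked helper, and the example.com URLs are placeholders for illustration. It only tells you whether crawling is blocked, not whether the page is indexed.

```python
from urllib.robotparser import RobotFileParser

# Placeholder rules: swap in your own page's path.
ROBOTS_TXT = """\
User-agent: *
Disallow: /URL-for-your-page/
"""


def is_blocked(robots_txt, url, user_agent="*"):
    """Return True if the robots.txt rules forbid user_agent from crawling url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


print(is_blocked(ROBOTS_TXT, "https://example.com/URL-for-your-page/"))  # True
print(is_blocked(ROBOTS_TXT, "https://example.com/another-page/"))       # False
```

Because Disallow rules match by prefix, the same rule also blocks any URL that starts with that path, such as child pages.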

Method #3: noindex a Page or Post With an HTML Meta Tag

As explained at the start of the article, noindex is typically set with an HTML meta tag. As such, you can add a line of code to your post or page’s HTML to achieve the same results as the Yoast SEO method. Place the following code in the page’s <head> section:

<meta name="robots" content="noindex" />

For this method to work, don’t combine it with the robots.txt method: a Disallow rule stops crawlers from fetching the page at all, so they never see the meta tag.
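To double-check that a page’s HTML actually contains the tag, you can parse it. Here’s a minimal Python sketch using the standard library’s html.parser; the RobotsMetaParser class and has_noindex_meta helper are hypothetical names, and the sketch only looks at meta tags named “robots” (not crawler-specific ones such as “googlebot”).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the rules from every <meta name="robots"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for rule in attributes.get("content", "").split(","):
                self.directives.add(rule.strip().lower())


def has_noindex_meta(html):
    """Return True if the HTML has a robots meta tag with noindex (or none)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return bool(parser.directives & {"noindex", "none"})


page = '<html><head><meta name="robots" content="noindex" /></head><body></body></html>'
print(has_noindex_meta(page))  # True
```

Self-closing tags like the one above are handled too, because HTMLParser passes them to handle_starttag by default.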

Method #4: Use the “noindex” Directive on the HTTP Response Header

Finally, this method is slightly more advanced but achieves the same results. Instead of a meta tag, it sends the noindex directive in an X-Robots-Tag HTTP response header. Add this line at the very top of your theme’s header.php file, before any HTML, because PHP can only send headers before output starts:

<?php header( 'X-Robots-Tag: noindex', true ); ?>

Keep in mind that most of your theme’s templates load header.php, so this method adds the noindex directive to all pages and posts, not just specific ones, unless you add extra code to target them. For this reason, it’s generally not the recommended method unless that’s exactly what you’re looking for.
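To see how a crawler might read this header, here’s a simplified Python sketch that checks a list of X-Robots-Tag values for noindex. The x_robots_blocks_indexing helper is hypothetical, and it assumes values shaped like “noindex”, “noindex, nofollow”, or a crawler-specific “googlebot: noindex”; it doesn’t parse every rule search engines support.

```python
def x_robots_blocks_indexing(header_values, crawler="googlebot"):
    """Return True if any X-Robots-Tag value tells `crawler` not to index the page.

    Simplified sketch: handles values like "noindex", "noindex, nofollow", "none",
    and "googlebot: noindex", but not rules containing their own colon
    (for example, "unavailable_after: <date>").
    """
    for value in header_values:
        scope, sep, rules = value.partition(":")
        if sep:
            # A "name: rules" value applies only to the named crawler.
            if scope.strip().lower() != crawler.lower():
                continue
        else:
            # Without a prefix, the rules apply to every crawler.
            rules = value
        directives = {rule.strip().lower() for rule in rules.split(",")}
        if directives & {"noindex", "none"}:
            return True
    return False


print(x_robots_blocks_indexing(["noindex"]))            # True
print(x_robots_blocks_indexing(["otherbot: noindex"]))  # False
```

To check a live page, you could collect its values with Python’s urllib.request, for example urlopen(url).headers.get_all("X-Robots-Tag") or [], and pass the result in.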

Factors That Influence the “noindex” Tag

There are a few factors that influence the effectiveness of the noindex tag.

“Disallow” robots.txt Directive

One of the methods explained above uses the Disallow directive in robots.txt. This method can work, but whether it does depends on several factors.

One case where it won’t work is if you have internal links from other posts or pages on your site that lead to the URL specified in the Disallow directive. Links from external pages that also lead to that URL have the same effect.

In both cases, search engines may find that URL and still index that page, despite the directive. For that reason, the Disallow directive is the least reliable method to use.

Finally, using the Disallow directive in addition to the noindex tag prevents the noindex tag from being effective because crawlers will parse the robots.txt file first and never parse the contents of the URL assigned to the Disallow directive.
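Because of this conflict, it can be worth auditing your noindexed pages against robots.txt. This Python sketch (standard library only) flags URLs that carry noindex but are also disallowed; the conflicting_urls helper and the example URLs are hypothetical, and you’d supply your own list of noindexed pages.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt that blocks the "Thank you" page from being crawled.
ROBOTS_TXT = """\
User-agent: *
Disallow: /thank-you/
"""


def conflicting_urls(robots_txt, noindexed_urls, user_agent="*"):
    """Return the noindexed URLs that robots.txt also blocks from crawling.

    Crawlers never fetch these pages, so they never see the noindex rule.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindexed_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical pages that already carry a noindex meta tag or header.
noindexed = [
    "https://example.com/thank-you/",
    "https://example.com/members-only/",
]
print(conflicting_urls(ROBOTS_TXT, noindexed))  # ['https://example.com/thank-you/']
```

For each URL it flags, you’d typically remove the Disallow rule so crawlers can fetch the page and read its noindex tag.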

“noindex” robots.txt Directive

Some site owners have also used an unofficial noindex directive in robots.txt to unindex a page, pages, or entire folders of their site. However, this directive was never part of the robots.txt standard, and Google stopped honoring it in September 2019, so it’s best to avoid it.

“nofollow” HTML Meta Tag

The nofollow HTML tag is related to the “Should search engines follow links on this Blog?” option from the Yoast SEO method. When you apply noindex to a page, through whichever method you prefer, you still allow search engines to crawl it, and as they crawl it, they explore the links it contains.

In some cases, you may want to stop search engines from following those links because they may lead to websites you can’t vouch for (for example, links left by spam bots in the comment section). If search engines determine that some of those links are malicious or use black hat SEO techniques, they may penalize your site.

To prevent this, you can add the nofollow tag to specific links you don’t vouch for, or select “No” for “Should search engines follow links on this Blog?” to stop search engines from following any link in your post.

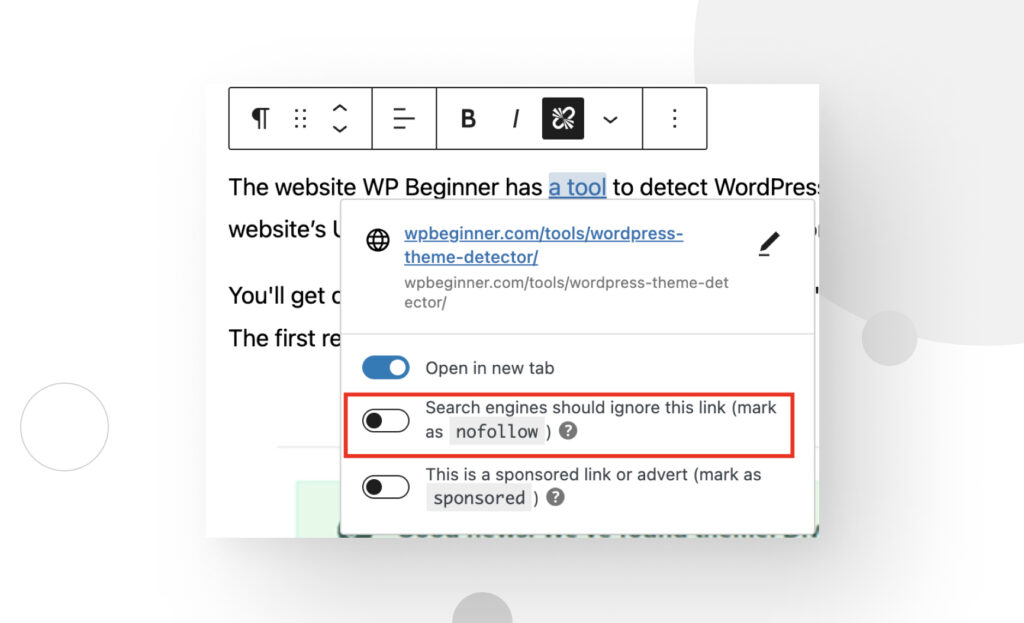

WordPress allows you to apply the nofollow tag without editing code. To do it, click on a link in the editor and then check “Search engines should ignore this link (mark as nofollow).”
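Under the hood, marking a link as nofollow adds rel="nofollow" to its <a> tag. If you want to review which outbound links in a post are still followed, a short Python sketch like this one can list them. It assumes you have the post’s HTML as a string; the followed_external_links helper and the example.com host are placeholders, and it treats subdomains such as www.example.com as external unless you pass that exact host.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class FollowedLinkFinder(HTMLParser):
    """Collects external <a href> links whose rel attribute lacks nofollow."""

    def __init__(self, site_host):
        super().__init__()
        self.site_host = site_host.lower()
        self.followed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = {name: (value or "") for name, value in attrs}
        href = attributes.get("href", "")
        # Relative links such as "/contact" have no hostname, so they count as internal.
        host = (urlparse(href).hostname or "").lower()
        rel_values = attributes.get("rel", "").lower().split()
        if host and host != self.site_host and "nofollow" not in rel_values:
            self.followed.append(href)


def followed_external_links(html, site_host):
    finder = FollowedLinkFinder(site_host)
    finder.feed(html)
    finder.close()
    return finder.followed


post_html = (
    '<a href="https://example.com/about">About</a> '
    '<a href="https://spam.example/deal">Deal</a> '
    '<a href="https://other.example/" rel="nofollow noopener">Other</a> '
    '<a href="/contact">Contact</a>'
)
print(followed_external_links(post_html, "example.com"))  # ['https://spam.example/deal']
```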

There Are Multiple Ways to noindex Pages in WordPress

As we’ve seen, there are multiple ways to noindex a web page. Most are equally effective, except for the robots.txt option, which tends to be unreliable for several reasons. Also, editing header.php affects all pages and posts by default, so you need to add extra code to prevent that.

Overall, Yoast SEO is the easiest and most reliable method since it’s quick, simple, and effective.

Keep in mind that these methods take time to work because web spiders will likely need to crawl your page once more to detect the noindex tag and keep your page off search results.

Frequently Asked Questions (FAQs)

Should I noindex White Paper Pages?

You should only noindex white paper pages if the white paper is not yours. If you’re hosting a third-party white paper as documentation that your users can refer to, leave the white paper but use the noindex tag on it. Since the original author is likely already hosting the original version on their site, your copy is unlikely to get traffic. If the white paper is yours, you should leave it indexed.
